通过 MATLAB 实现基本的单变量线性回归,并绘制梯度图。

这是本学期我的 ML 实验报告。实验报告的题目为 Andrew Ng (吴恩达) 的 Stanford Machine Learning 课程。实验报告编号可能与课程页面的编号略有差别,但问题不大。

# Experiment 1: Linear Regression

This is the report of Experiment 1: Linear Regression.

# Purpose

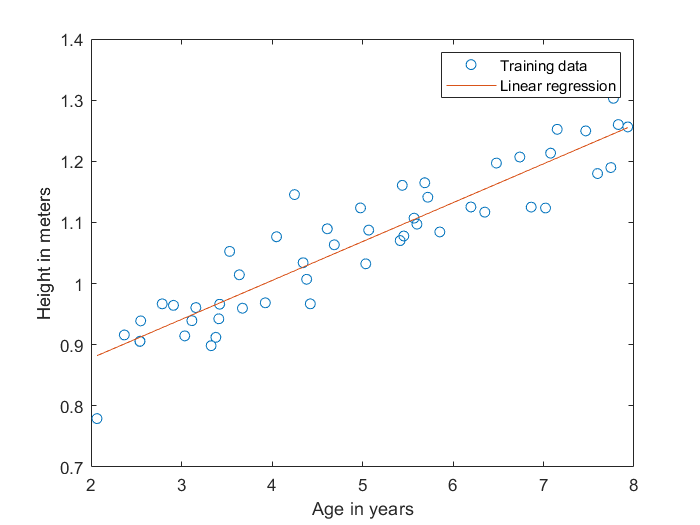

In this experiment, we have the data of ages and heights. We want to find the connection of these two groups of data.

# Hypothesis

We hypothesize that ages() and heights() are linear dependent.

# Procedure and Results

We implement linear regression using gradient decent. The gradient decent update rule is

We let learning rate .

After the first iteration, we got the value of theta is

After iterations, the value of data is

The figure of the result is

Using the model, we can know that while the age is , the height is

When the age is , the height is

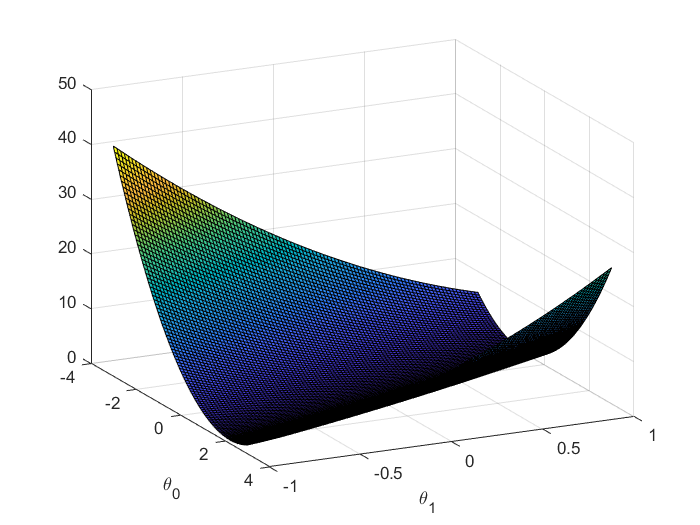

Then, I draw the figure to visualize the relationship between and , let

The figure is as follow: