探究正则化系数应对欠拟合、过拟合的效果。

# Experiment 6: Regularization

This is the report of Experiment 6: Regularization.

# Purpose

In this experiment, we want to find the purpose of regularization term on underfitting and overfitting.

The first group of data is a regression problem with single argument. We will try fitting a 5th-order polynomial to a data set of only 7 points.

The second group of data is a classification problem with logistic regression. We also want to find the influence of regularization parameter on underfitting and overfitting.

# Hypothesis

For the first sub-experiment, our hypothesis will be

For the second sub-experiment, our hypothesis will be

# Procedure

# Regularized Linear Regression

With the hypothesis

we can get the cost function with respect to :

where is the regularization parameter.

Using the normal equations

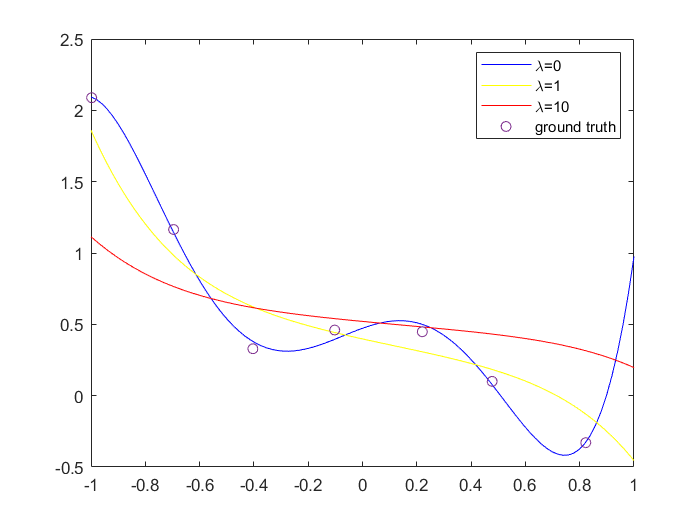

When , we got the figure as follows:

# Regularized Logistic Regression

With the hypothesis

the cost function in regularized logistic regression is

The Newton's Method rule is

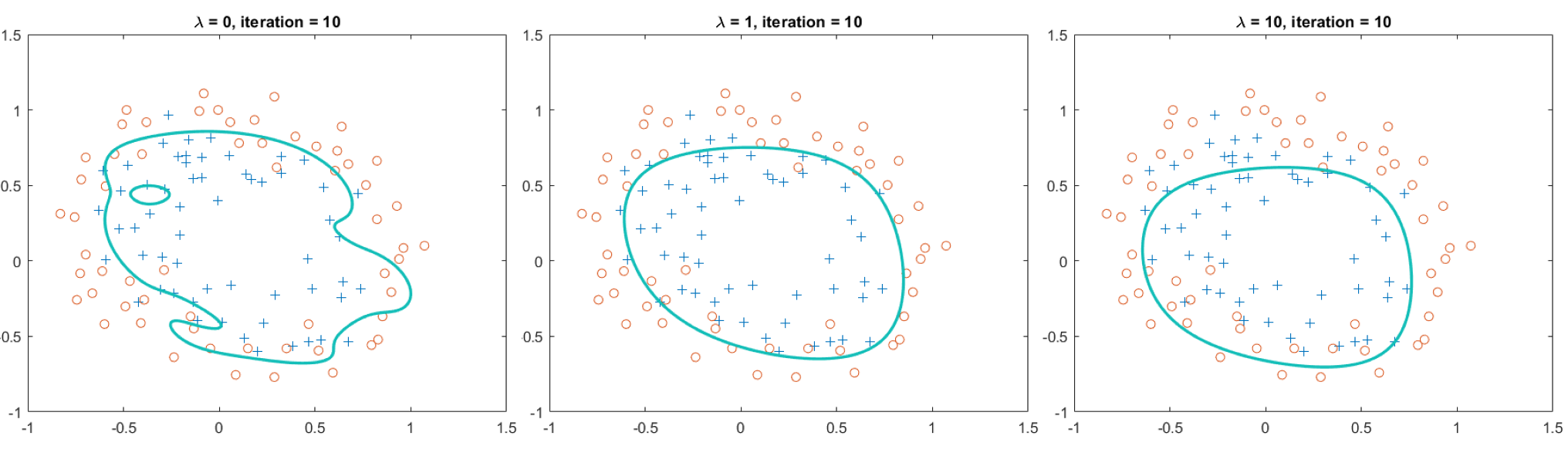

With 10 iterations, we get the results as follow:

# Answer of the questions

# Question 1

From looking at these graphs(in Exp6-1), what conclusions can you make about how the regularization parameter affects your model?

I found that the larger the , the smoother the curve of the polynomial, which means the polynomial is approximating a line.

# Question 2

How does affect the results?

Not only affect the shape of the curve, it also affect the topological structure of the curve. For example, there is a hole when , and the hold disappear while or .